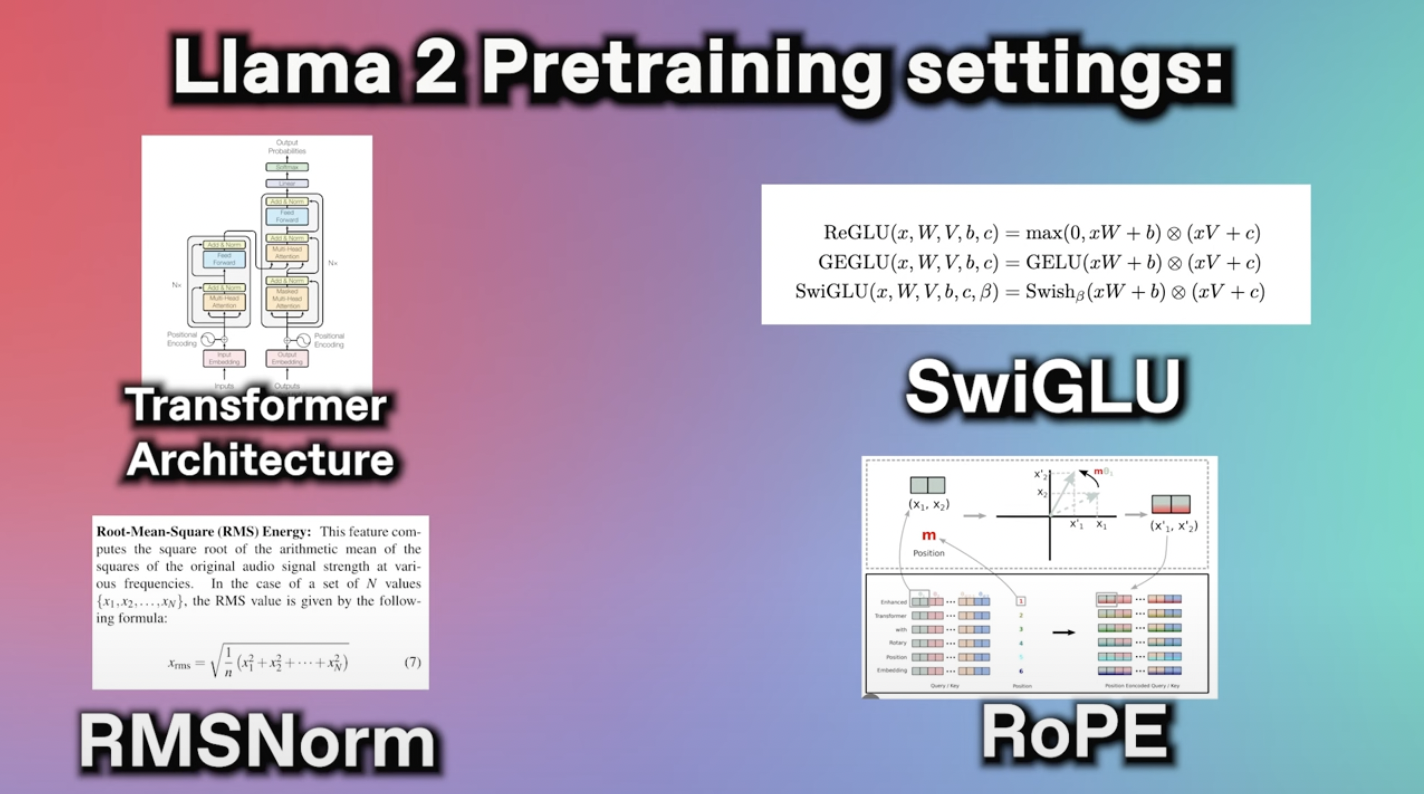

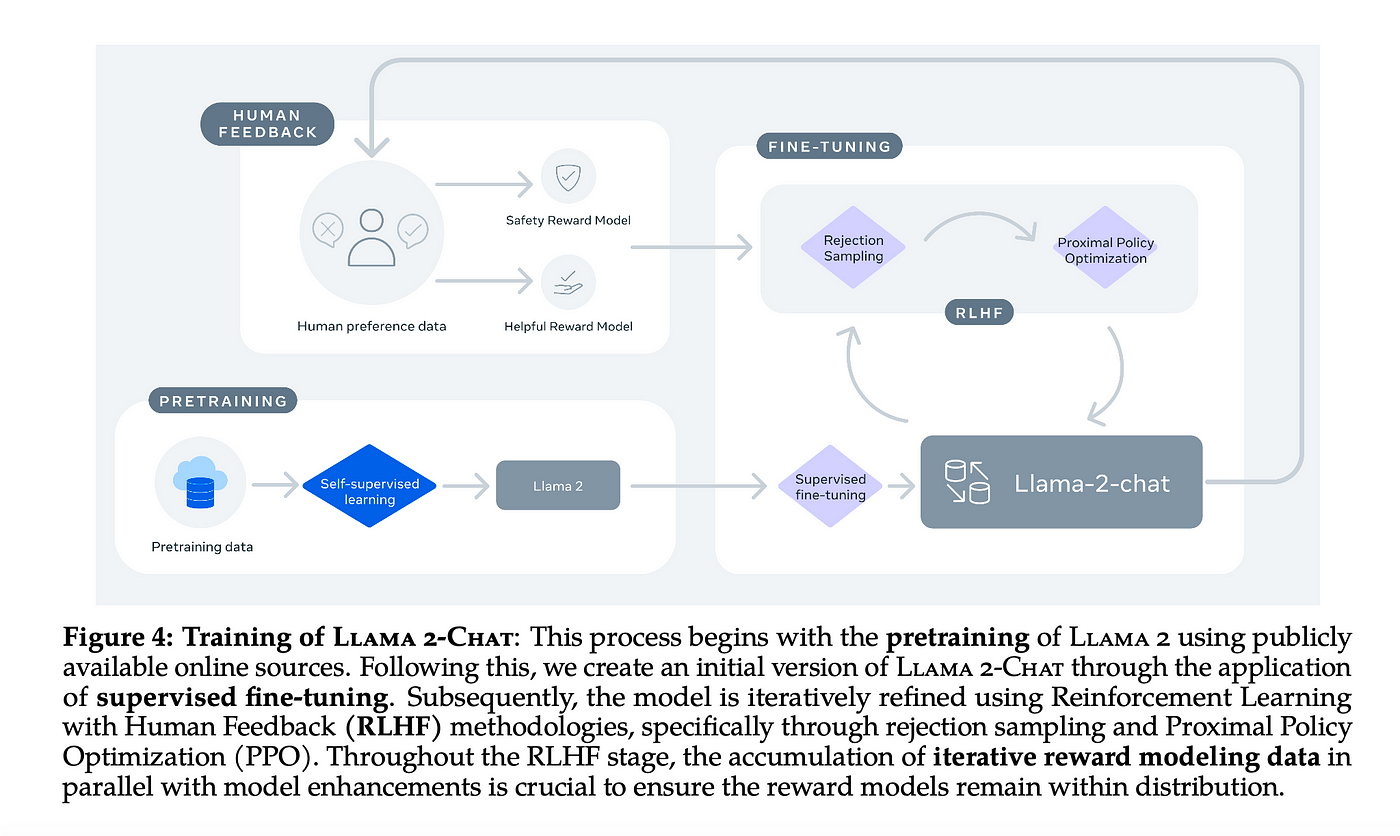

In this work we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters. The Llama 2 paper describes the architecture in good detail to help data scientists recreate and fine-tune the models, unlike OpenAI papers, where you have to deduce it. The video above goes over the architecture of Llama 2, a comparison of Llama 2 and Llama 1, and finally a comparison of Llama 2 against other non-Meta AI models. Weights for the Llama 2 models can be obtained by filling out this form. The architecture is very similar to the first Llama, with the addition of Grouped Query Attention (GQA), following this paper. We introduce LLaMA, a collection of foundation language models ranging from 7B to 65B parameters. We train our models on trillions of tokens and show that it is...
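To make the GQA idea concrete, here is a minimal NumPy sketch (an illustration, not Meta's implementation). It assumes queries, keys and values have already been projected and split into heads, and it omits rotary embeddings and the output projection. The function name `grouped_query_attention` and the shapes are assumptions for this example. The core trick is that there are fewer key/value heads than query heads, and each KV head is shared by a fixed group of consecutive query heads.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the last axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def grouped_query_attention(q, k, v, causal=True):
    """q: (n_heads, seq, head_dim); k, v: (n_kv_heads, seq, head_dim)."""
    n_heads, seq_len, head_dim = q.shape
    n_kv_heads = k.shape[0]
    assert n_heads % n_kv_heads == 0, "query heads must split evenly into KV groups"
    group = n_heads // n_kv_heads
    # Each KV head is reused by `group` consecutive query heads:
    # KV head 0 serves query heads 0..group-1, KV head 1 the next group, etc.
    k = np.repeat(k, group, axis=0)
    v = np.repeat(v, group, axis=0)
    # Scaled dot-product scores: (n_heads, seq, seq).
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(head_dim)
    if causal:
        # Block attention to future positions (strictly upper triangle).
        mask = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
        scores = np.where(mask, -np.inf, scores)
    return softmax(scores) @ v

# Usage: 8 query heads sharing 2 KV heads (groups of 4).
rng = np.random.default_rng(0)
q = rng.standard_normal((8, 5, 16))
k = rng.standard_normal((2, 5, 16))
v = rng.standard_normal((2, 5, 16))
out = grouped_query_attention(q, k, v)
print(out.shape)  # (8, 5, 16)
```

Only the smaller `k`/`v` tensors need to be cached during generation, so the KV cache shrinks by a factor of `n_heads / n_kv_heads`. For example, the published Llama 2 70B configuration uses 64 query heads and 8 KV heads, so it needs 8x less KV cache than full multi-head attention would.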

This repo contains GPTQ model files for Meta's Llama 2 70B. The size of Llama 2 70B in fp16 is around 130GB, so no, you can't run Llama 2 70B fp16 with 2 x 24GB. Token counts refer to pretraining data only. All models are trained with a global batch size of... Hi there guys, just did a quant to 4 bits in GPTQ for llama-2-70B. The FP16 weights in HF format had to be re... If you're involved in data science or AI research, you're already aware of the immense processing capabilities... GPTQ is not only efficient enough to be applied to models boasting hundreds of billions of parameters, but... Discover how to run Llama 2, an advanced large language model, on your own machine.
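The "around 130GB" figure follows from simple arithmetic: 70 billion weights at 2 bytes each in fp16 is 140 GB in decimal units, or about 130 GiB. The sketch below repeats that calculation for 4-bit weights. It assumes a round 70e9 parameter count and counts weights only. It ignores the KV cache, activations, framework overhead and the per-group scales and zero-points that GPTQ stores alongside the 4-bit values. The helper names and the 48 GiB budget are assumptions for this example.

```python
# Assumed budget: two 24GB consumer GPUs, treated here as 48 GiB total.
GPU_BUDGET_GIB = 2 * 24

def weight_memory(n_params: float, bits_per_weight: int) -> tuple[float, float]:
    """Return (decimal GB, binary GiB) needed to store the weights alone."""
    n_bytes = n_params * bits_per_weight / 8
    return n_bytes / 1e9, n_bytes / 2**30

def fits(gib: float, budget_gib: float = GPU_BUDGET_GIB) -> bool:
    """Weights-only check; ignores KV cache, activations and runtime overhead."""
    return gib <= budget_gib

for label, bits in [("fp16", 16), ("4-bit", 4)]:
    gb, gib = weight_memory(70e9, bits)
    print(f"{label}: {gb:.0f} GB ({gib:.1f} GiB), fits in 2 x 24GB: {fits(gib)}")
# fp16: 140 GB (130.4 GiB), fits in 2 x 24GB: False
# 4-bit: 35 GB (32.6 GiB), fits in 2 x 24GB: True
```

The 4-bit result leaves some headroom under 48 GiB, but the real footprint is higher once the overheads listed above are included.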

Llama-2-Chat, which is optimized for dialogue, has shown similar performance to popular closed-source models like ChatGPT and PaLM. We will fine-tune the Llama-2 7B Chat model in this guide. Steer the fine-tune with prompt engineering: when it comes to fine... LLaMA 2.0 was released last week, setting the benchmark for the best open-source (OS) language model. Here's a guide on how you can... Open Foundation and Fine-Tuned Chat Models: In this work we develop and release Llama 2, a collection of pretrained and fine-tuned... For LLaMA 2, the answer is yes. This is one of the attributes that make it significant. While the exact license is Meta's own and not one of the...
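Prompting or fine-tuning Llama-2-Chat works best when inputs follow the dialogue template the chat models were trained on. The sketch below renders that template as a plain string, modeled on the `[INST]` / `<<SYS>>` markers used in Meta's reference code. Two assumptions apply here. First, `<s>` and `</s>` are written as literal text, whereas the reference code adds BOS/EOS as token IDs during tokenization. Second, the function name `format_llama2_chat` is hypothetical.

```python
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"

def format_llama2_chat(turns, system=None):
    """Render (user, assistant) turns; the final assistant reply may be None."""
    parts = []
    for i, (user, assistant) in enumerate(turns):
        if i == 0 and system:
            # The system prompt is folded into the first user message.
            user = B_SYS + system + E_SYS + user
        text = f"<s>{B_INST} {user.strip()} {E_INST}"
        if assistant is not None:
            # Completed turns end with the assistant reply and an EOS marker.
            text += f" {assistant.strip()} </s>"
        parts.append(text)
    return "".join(parts)

print(format_llama2_chat([("Hi", "Hello!"), ("How are you?", None)]))
# <s>[INST] Hi [/INST] Hello! </s><s>[INST] How are you? [/INST]
```

Leaving the last assistant slot empty produces a prompt that ends at `[/INST]`, which is where the model is expected to continue generating.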

This repository contains the code and resources to create a chatbot using Llama 2 as the large language model, Pinecone as the vector store for efficient similarity search, and Streamlit for... A clearly explained guide for running quantized open-source LLM applications on CPUs using Llama 2, C Transformers, GGML, and LangChain: a step-by-step guide on Towards Data Science. LangChain is a powerful open-source framework designed to help you develop applications powered by a language model, particularly a large language model (LLM). The tutorials cover related topics: LangChain, Llama 2, Petals, and Pinecone.
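In a chatbot built this way, the vector store's job is to find the stored text chunks whose embeddings are closest to the user's question. Those chunks are then placed into the LLM prompt. The in-memory NumPy sketch below shows only that retrieval step using cosine similarity. It is not Pinecone's or LangChain's API. The 3-dimensional vectors are hand-picked toy values standing in for real embedding-model output, and the helper names are hypothetical.

```python
import numpy as np

def top_k_cosine(query_vec, doc_vecs, k=2):
    """Return indices of the k rows of doc_vecs most similar to query_vec."""
    q = query_vec / np.linalg.norm(query_vec)
    d = doc_vecs / np.linalg.norm(doc_vecs, axis=1, keepdims=True)
    scores = d @ q  # cosine similarity of each document with the query
    return np.argsort(-scores)[:k].tolist()

def build_prompt(question, query_vec, docs, doc_vecs, k=2):
    """Stuff the k most similar chunks into a prompt for the LLM."""
    idx = top_k_cosine(query_vec, doc_vecs, k)
    context = "\n".join(f"- {docs[i]}" for i in idx)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Toy corpus with made-up embeddings (assumption: real ones come from a model).
docs = [
    "Llama 2 ranges from 7B to 70B parameters.",
    "Pinecone is a managed vector database.",
    "Streamlit builds web UIs in Python.",
]
doc_vecs = np.array([[1.0, 0.1, 0.0], [0.0, 1.0, 0.1], [0.1, 0.0, 1.0]])
query_vec = np.array([0.9, 0.2, 0.0])

print(top_k_cosine(query_vec, doc_vecs, k=2))  # [0, 1]
print(build_prompt("How big is Llama 2?", query_vec, docs, doc_vecs, k=1))
```

A hosted vector store does the same nearest-neighbour lookup, using approximate indexes so it can scale to millions of vectors. The exhaustive scan here only suits small collections.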
